2022-04-07: The code snippets in this post reference an older version of the examples repo prior to the release of Daily React Hooks. Any code not using Daily React Hooks is still valid and will work; however, we strongly suggest using Daily React Hooks in any React apps using Daily. To see the pre-Daily hooks version of the examples repo, please refer to the pre-daily-hooks branch. To learn more about Daily React Hooks, read our announcement post.

We’re excited to announce the beta launch of our newest API, which lets developers add live transcription to a Daily call. With this new API, developers building with Daily’s call object can generate call transcripts in real time, with upwards of 90% accuracy.

If you’re interested in adding transcription to a Daily call today, you can skip ahead to our tutorial. Or read on to learn about how customers are using live transcription today, and why we’ve partnered with Deepgram for the development of this API.

Organizations across verticals use live transcription to support transparency and analytics, and to help improve accessibility. Some of the use cases we see for transcription today include:

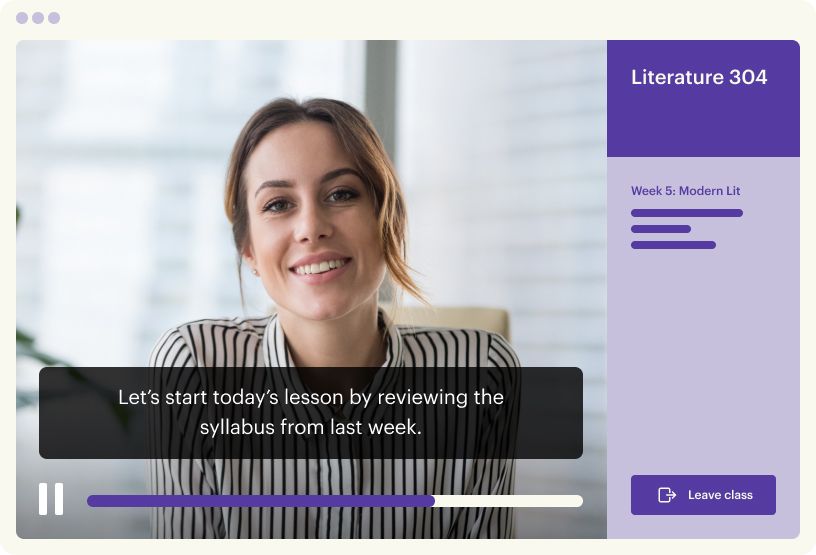

- Online classes, where live transcription helps support student learning, and improves accessibility for the deaf, hard of hearing, learning disabled, and ESL learners.

- Training and coaching customer service agents, where call transcriptions provide insight into customer conversations and illuminate areas for improvement.

- Transcribing meetings, for improved accessibility and engagement, and to generate an instant library of searchable, shareable action items.

For the development of this API, we partnered with Deepgram, whose Automatic Speech Recognition technology generates high-accuracy transcripts with less than 300 ms latency. Using 100% deep-learning technology, Deepgram’s transcription models can be trained to recognize unique speech patterns, accents, and vocabulary, including industry-specific acronyms or terminology. The result is more accurate, reliable transcription, trusted by developers behind companies such as NASA, Nvidia, and ConvergeOne.

We’ve extensively tested this API during a closed beta and we’ll be rolling out transcription support to Daily Prebuilt users later in the quarter. In the meantime, we’d love to hear from developers who test transcription in their custom apps about their users’ experience.

Set up transcription tools and (if you want) Daily’s demo repo

Before we dive in, be sure to sign up for an account with Daily if you haven’t done so already. Real-time transcription is billed per unmuted participant minute (daily.co/pricing/).

Once you have a Daily account, you’ll need a Daily room. You can create one from the Daily Dashboard or with a POST request to the `/rooms` endpoint. Make note of your Daily API key in the Developers section of the dashboard (you’ll need it if you run the demo).
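If you'd rather create the room from code than from the dashboard, the request might look something like the sketch below. It targets Daily's REST endpoint for rooms and authenticates with your API key as a bearer token. The helper names, the room name, and the one-hour expiry are assumptions made for illustration, not part of the demo repo.

```javascript
// Build the HTTP request for Daily's "create room" REST call.
// The one-hour expiry is an illustrative assumption; adjust or drop it.
function buildCreateRoomRequest(apiKey, roomName) {
  const oneHourFromNow = Math.floor(Date.now() / 1000) + 60 * 60;
  return {
    url: 'https://api.daily.co/v1/rooms',
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        name: roomName,
        properties: { exp: oneHourFromNow },
      }),
    },
  };
}

// Send the request. `fetchImpl` defaults to the global fetch
// (available in browsers and in Node 18+).
async function createRoom(apiKey, roomName, fetchImpl = globalThis.fetch) {
  const { url, init } = buildCreateRoomRequest(apiKey, roomName);
  const response = await fetchImpl(url, init);
  if (!response.ok) {
    throw new Error(`Room creation failed with status ${response.status}`);
  }
  // The JSON response describes the new room, including its `url`.
  return response.json();
}
```

Run a call like this on a server rather than in the browser, so your API key stays private.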

Now that you have a Daily room, feel free to get building, or read on for a step-by-step tutorial highlighting our demo repository.

Testing with Daily's demo repo

To run the repo locally, fork and clone, use the env.example to set your DAILY_API_KEY and DAILY_DOMAIN in .env.local, and then:

yarn

yarn workspace @custom/live-transcription dev

Join a meeting call as an owner to enable transcription

Only meeting owners can turn on transcription. Daily identifies meeting owners as participants who join a Daily room with a corresponding meeting token that has the is_owner property set to true.

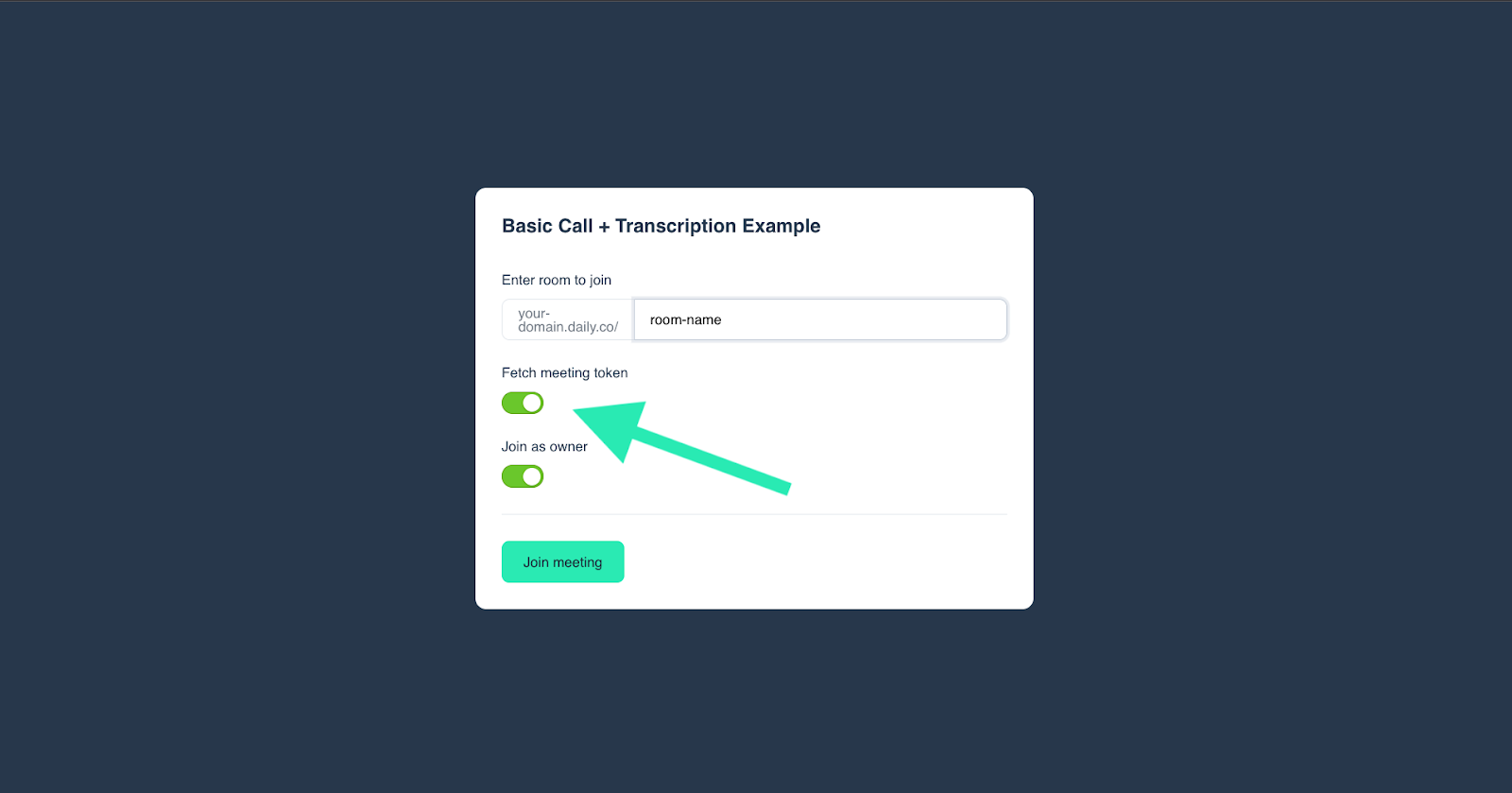

In the demo app, enter a room URL, and then toggle Fetch Meeting Token > Join as Owner:

The demo app creates a token using a Next API route. Those weeds are beyond the scope of this post, but feel free to explore the shared Next endpoint and where it’s called in the source code, or to reference our previous post that focused on Next API routes for an overview.

There are many ways to generate meeting tokens, including a traditional server, a Glitch server, self-signed tokens, or separate forms, to name a few. Choose whatever is best for your app. Just make sure that is_owner is set to true for the participants you want to be able to turn on transcription.
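As one example of the server-side route, here is a minimal sketch of requesting an owner token from Daily's REST API. The helper names are hypothetical and this is not how the demo's Next endpoint is written; it only shows the shape of the request, with is_owner set to true.

```javascript
// Build the HTTP request for an owner meeting token scoped to one room.
function buildOwnerTokenRequest(apiKey, roomName) {
  return {
    url: 'https://api.daily.co/v1/meeting-tokens',
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        // is_owner: true is what allows this participant to start transcription.
        properties: { room_name: roomName, is_owner: true },
      }),
    },
  };
}

// Request the token. `fetchImpl` defaults to the global fetch
// (available in browsers and in Node 18+).
async function fetchOwnerToken(apiKey, roomName, fetchImpl = globalThis.fetch) {
  const { url, init } = buildOwnerTokenRequest(apiKey, roomName);
  const response = await fetchImpl(url, init);
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }
  const { token } = await response.json();
  return token;
}
```

The returned token can then be passed along when joining, for example with callObject.join({ url, token }). Keep this request on a server so the API key never reaches the client.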

Click "Join meeting", and then in the call tray click Transcript > Start transcribing.

Give it a few seconds, and you should see your words displayed on the screen. Let’s look at how that happens.

Call startTranscription() on the call object to start transcribing

The "Start transcribing" button in the demo app calls the Daily startTranscription() method on click:

// TranscriptionAside.js, abridged snippets

async function startTranscription() {

setIsTranscribing(true);

await callObject.startTranscription();

}

// Other things here

<Button

fullWidth

size="tiny"

disabled={isTranscribing}

onClick={() =>

startTranscription()

}

>

{isTranscribing ? 'Transcribing' : 'Start transcribing'}

</Button>

We could have designed the demo to start transcribing as soon as a call is joined. As a courtesy to call participants, instead we required an owner to explicitly start transcribing to notify attendees that their words will be recorded. Participants who join a call after transcription has started see an indicator on the “Transcript” icon in the call tray to view the transcription in progress.
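Since only owners can start transcription, you may want to check ownership before making the call. The sketch below is one way to do that, assuming the local participant object exposes its owner flag; the helper name startTranscriptionIfOwner is hypothetical and not part of the demo.

```javascript
// Start transcription only when the local participant is a meeting owner.
// Resolves to true if startTranscription() was called, false if it was skipped.
async function startTranscriptionIfOwner(callObject) {
  const local = callObject.participants().local;
  if (!local || !local.owner) {
    return false;
  }
  await callObject.startTranscription();
  return true;
}
```

A return value of false gives your UI a chance to hide or disable the button instead of showing one that does nothing.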

The demo app also listens for the transcription-started event and logs a message to the console.

// TranscriptionProvider.js, abridged snippet

const handleTranscriptionStarted = useCallback(() => {

console.log('💬 Transcription started');

setIsTranscribing(true);

}, []);

// Other things here

callObject.on('transcription-started', handleTranscriptionStarted);

You could listen for this event to respond in other ways, such as changing the UI.

Listen for messages from Deepgram and render the transcription

Deepgram sends a message with each transcription in the form of an app-message.

The demo app listens for app-message events where the fromId is transcription and the data has a truthy is_final property. That property indicates a probable pause, so each final message tends to hold a "sentence-like" phrase. The app then updates a transcriptionHistory state value to include each new record:

// TranscriptionProvider.js, abridged snippet

const handleNewMessage = useCallback(

(e) => {

const participants = callObject.participants();

// Collect only transcription messages, and gather enough

// words to be able to post messages at sentence intervals

if (e.fromId === 'transcription' && e.data?.is_final) {

// Get the sender's current display name or the local name

const sender = e.data?.session_id !== participants.local.session_id

? participants[e.data.session_id].user_name

: participants.local.user_name;

setTranscriptionHistory((oldState) => [

...oldState,

{ sender, message: e.data.text, id: nanoid() },

]);

}

setHasNewMessages(true);

},

[callObject]

);

// Other things here

callObject.on('app-message', handleNewMessage);
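The filtering and name lookup in that handler can also be pulled out into a pure function, which makes it easier to test outside React. Here is a rough sketch along those lines. The function name toTranscriptEntry and the 'Guest' fallback for a speaker who has already left the call are assumptions, not something the demo does.

```javascript
// Convert an app-message event into a transcript entry, or return null
// when the event is not a final transcription message.
function toTranscriptEntry(event, participants) {
  if (event?.fromId !== 'transcription' || !event.data?.is_final) {
    return null;
  }
  const { session_id: sessionId, text } = event.data;
  const isLocal = sessionId === participants.local?.session_id;
  const participant = isLocal ? participants.local : participants[sessionId];
  // Assumed fallback: the speaker may no longer be in the participants list.
  const sender = participant?.user_name || 'Guest';
  return { sender, message: text };
}
```

Inside the listener you would call it with the event and callObject.participants(), then append any non-null result to your state.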

To render the transcription, the TranscriptionAside component maps over transcriptionHistory:

// TranscriptionAside.js, abridged snippet

<div className="messages-container" ref={msgWindowRef}>

{transcriptionHistory.map((msg) => (

<div key={msg.id}>

<span className="sender">{msg.sender}: </span>

<span className="content">{msg.message}</span>

</div>

))}

</div>

Again, that’s just how this demo does things! There are many options for how to handle and render transcription messages, as long as you’re listening for app-message events with a fromId of transcription. Here’s another example that logs transcriptions to the console:

callObject.on('app-message', (msg) => {

if (msg?.fromId === 'transcription' && msg.data?.is_final) {

console.log(`${msg.data.user_name}: ${msg.data.text}`);

}

});

Listen and respond to transcription errors

The transcription-error event fires whenever there has been a problem with transcription. To get notified when that happens, set up a listener. The demo app, for example, logs a message to the console and updates app state:

// TranscriptionProvider.js, abridged snippet

const handleTranscriptionError = useCallback(() => {

console.log('❗ Transcription error!');

setIsTranscribing(false);

}, []);

// Other things here

callObject.on('transcription-error', handleTranscriptionError);

Call stopTranscription() on the call object to end the transcription

stopTranscription() closes the connection between Daily and Deepgram, ending the transcription. In the demo app, the "Stop transcribing" button calls the function:

// TranscriptionAside.js, abridged snippet

async function stopTranscription() {

setIsTranscribing(false);

await callObject.stopTranscription();

}

// Other things here

<Button

fullWidth

size="tiny"

disabled={!isTranscribing}

onClick={() =>

stopTranscription()

}

>

Stop transcribing

</Button>

As with startTranscription(), a corresponding transcription-stopped event is emitted on stopTranscription(). If the method is not called, transcription ends automatically when a Daily call ends.
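With the started, stopped, and error events all in play, it can be handy to manage them in one place. The sketch below registers a listener for each and returns a cleanup function. The name trackTranscription and the status strings passed to onChange are hypothetical; only the event names and the on() and off() methods come from Daily.

```javascript
// Report transcription status changes through a single callback.
// `onChange` receives 'active', 'stopped', or 'error' (illustrative labels).
function trackTranscription(callObject, onChange) {
  const handlers = {
    'transcription-started': () => onChange('active'),
    'transcription-stopped': () => onChange('stopped'),
    'transcription-error': () => onChange('error'),
  };
  for (const [eventName, handler] of Object.entries(handlers)) {
    callObject.on(eventName, handler);
  }
  // Return a cleanup function that removes every listener again.
  return () => {
    for (const [eventName, handler] of Object.entries(handlers)) {
      callObject.off(eventName, handler);
    }
  };
}
```

In a React component, you could call this inside an effect and return the cleanup function so listeners are removed on unmount.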

After the transcript has rolled

There you have it! All the tools to turn your spoken words into written ones with Daily. For full documentation, check out domain config, startTranscription(), stopTranscription(), and transcription events.

Storing your transcript

By default, real-time transcripts are not stored anywhere. You can enable storage on Daily or in a custom S3 bucket; learn more about this here.
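Separately from those storage options, if all you need is a client-side copy, entries shaped like the demo's transcriptionHistory could be flattened into plain text. This is only a sketch under that assumption, and formatTranscript is a hypothetical helper rather than part of Daily's API.

```javascript
// Turn an array of { sender, message } entries into a plain-text transcript,
// one "sender: message" line per entry.
function formatTranscript(history) {
  return history
    .map(({ sender, message }) => `${sender}: ${message}`)
    .join('\n');
}
```

The resulting string could be copied to the clipboard or offered as a download once the call ends.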