Daily powers real-time audio and video for millions of people all over the world. Our customers are developers who use our APIs and client SDKs to build audio and video features into applications and websites.

This week at Daily, we are writing about the infrastructure that underpins everything we do. When it works as intended, this infrastructure is mostly invisible. But it's critically important, and we're very proud of it.

Our kickoff post this week goes into more detail about what topics we’re diving into and how we think about our infrastructure’s “job to be done.” Feel free to click over and read that intro before (or after) reading this post. You can also listen to our teammate Chad, a Solutions Engineer here at Daily, who's been sharing videos on topics throughout the week.

Today we'll talk about recording. Our recording APIs support a pretty wide range of recording use cases, including use cases for which low cost and simplicity are the most important “features.” But we'll focus in this post on the design of our Video Component System — a toolkit for creating dynamic recordings — and the infrastructure that makes VCS work.

Our goal with the Video Component System is to expand the boundaries of what's possible in recording live video experiences. You can use VCS to build full-featured mini-applications that run on Daily's recording servers in the cloud.

Recordings that are as engaging as a live video experience

Daily's original focus was WebRTC video. We created one of the earliest WebRTC developer platforms and have been helping customers build video applications since 2017.

Our experience with real-time video has influenced how we think about recording. In real-time video apps, features like dynamic layouts, emoji reactions, shared whiteboard canvases, text chat, text and graphical overlays, and animated UI elements are common.

Recordings benefit from engaging, event-driven, highly designed visual elements too! A recording that leaves out context the live UI provided is less useful than it could be. So our recording APIs and infrastructure have evolved based on what we've seen our customers build in their real-time apps.

For example, a live fitness class might have countdown timers and “toasts” that the instructor can trigger from a menu of presets. Recordings of these live classes make great on-demand content. And the recordings are an even better experience if they include the countdown timers and the text overlay toasts from the live classes.

Similarly, online virtual events are often managed by a producer who can do things like control the on-screen layout (spotlighting individual speakers or showing a grid of panelists), invite audience members “to the stage” to ask questions, and run interactive audience activities such as polls. Recordings of these events can — and should — include the same layout changes, poll results, and other dynamic elements as the live stream.

Building blocks for dynamic recordings

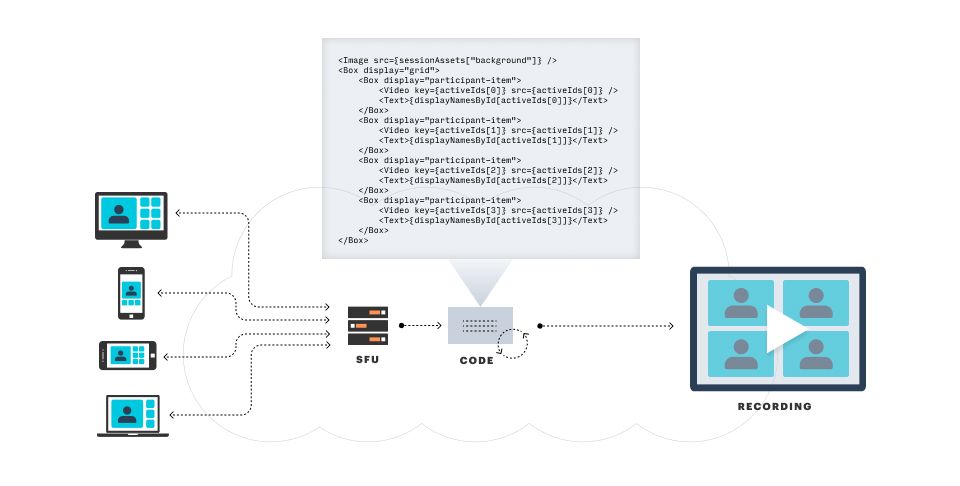

To incorporate these custom, dynamic elements into recordings, we need a way for application developers to write code that can run inside the Daily media processing “pipelines” that create recordings.

Developers using our JavaScript, iOS, and Android SDKs are already writing application code that creates rich, event-driven, video-centric user interfaces. Can we just host a version of that application code [waves hands] somewhere in the cloud?

One approach would be to use a headless web browser on a virtual machine, point that browser at a special “recording UI” web app, and capture the output. Most people who are building a WebRTC video recording framework for the first time go down this road. But it turns out that headless web browsers don't work well for recording production-quality video because web browsers weren't designed with this use case in mind. (We've written about the advantages and disadvantages of various approaches to recording, so feel free to follow that link if more details are of interest.)

So we can't use a headless web browser, but we want to make it easy to write modular, reusable code that runs on Daily's cloud infrastructure. Let's take a step back and define some requirements.

We need:

- robust guarantees about rendering quality, especially frame rate

- low computation (and other) costs so that recording can be priced affordably

- reliable code execution and good debugging tools

We'd also like:

- a programming language and environment that is familiar to many developers

- good modularity so we can provide base implementations of core functionality that are easy for developers to extend

- code reuse between our recording and client SDK environments

Pauli Ojala, who leads the development of our recording toolkit work at Daily, had the insight that we could design a web-like development environment that has some of the benefits of the headless browser approach (familiarity to developers, code reuse) while also providing guarantees that are important for production-quality video output (predictable frame rate and rendering characteristics, cost-effective scaling, better security sandboxing).

We call this web-like development environment for dynamic recordings our Video Component System. Here's how our documentation describes it:

What is VCS?

VCS is a video-oriented layout engine based on pure JavaScript functions that can be used to generate layouts, known as “compositions”. Compositions let developers turn Daily calls into engaging streamed and recorded content. For developers building live streaming and cloud recording features into their apps, VCS lets developers quickly and easily:

- Create and apply video grids and other custom layouts

- Render animated overlay graphics

- Customize rendering and layout based on the user's platform and device

What can you build with VCS?

VCS makes it easy to add advanced cloud recording features to your real-time app. Some key ways we see customers using VCS include:

- Building a recording “studio” for streamers and creators

- Hosting interactive, multiparty virtual events (such as backstage Q&A), which are broadcast live to a wider audience via YouTube and Facebook Live

- Creating high-quality recorded content from live events (e.g. an online fitness studio or edtech platform that wants to create an archive of recorded classes)

If the part about “pure JavaScript functions” made you think of React, that's not an accident. Most web developers today are familiar with React's model of (mostly) stateless UI components. We've borrowed this core concept from React, along with React's JSX syntax.

Here's an example VCS component that provides a starting point for an animated transparent image overlay:

    export default function CustomOverlay() {
      // our animation state
      const animRef = React.useRef({
        t: -1,
        pos: {
          x: Math.random(),
          y: Math.random(),
        },
        dir: {
          x: 1,
          y: 1,
        },
      });

      const src = 'test_square';
      const opacity = 0.5;

      return (
        <Image
          src={src}
          blend={{ opacity }}
          layout={[placeImage, animRef.current.pos]}
        />
      );
    }

Please note that the above listing doesn't include the placeImage() function that actually positions the <Image>. The standard engineering joke here would be that the definition is left as an exercise to the reader. But actually, we do have a definition for you! The placeImage() function – and many more details – are found in this tutorial that shows how to extend the VCS baseline composition.
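To give a feel for what a layout function does before you click through, here is a minimal sketch in plain JavaScript. It is not the tutorial's placeImage(). It assumes a layout function receives the parent frame plus the params passed in the layout prop and returns an { x, y, w, h } frame. The names placeImageSketch and stepAnimation, the 25% relative size, and the bounce speed are all hypothetical choices made for illustration.

```javascript
// Hypothetical layout function: NOT the tutorial's placeImage().
// Assumption: it receives the parent frame and the params from the
// `layout` prop, and returns the frame the image should be drawn into.
function placeImageSketch(parentFrame, params) {
  const relativeSize = 0.25; // image width as a fraction of the parent (assumed)
  const w = parentFrame.w * relativeSize;
  const h = w; // assumes the test image is square
  // params.x and params.y are normalized to 0..1, like animRef.current.pos
  const x = parentFrame.x + params.x * (parentFrame.w - w);
  const y = parentFrame.y + params.y * (parentFrame.h - h);
  return { x, y, w, h };
}

// Hypothetical per-frame update: move pos along dir and bounce at the edges.
// Assumes small steps (speed * dt well below 1).
function stepAnimation(anim, dt, speed = 0.2) {
  for (const axis of ['x', 'y']) {
    let p = anim.pos[axis] + anim.dir[axis] * speed * dt;
    if (p > 1) {
      p = 2 - p; // reflect off the far edge
      anim.dir[axis] = -1;
    } else if (p < 0) {
      p = -p; // reflect off the near edge
      anim.dir[axis] = 1;
    }
    anim.pos[axis] = p;
  }
  return anim;
}
```

Keeping the position normalized to 0..1 means the same animation state works at any output resolution, because the layout function only converts to pixels at the last moment.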

The VCS development environment also includes video-specific rendering hooks, a function-based layout engine, support for including content pulled from the web in recordings, full source code for all of the built-in VCS components and scaffolding, and a simulator that lets you explore the many customizable parameters of the VCS baseline composition.

So far, we've concentrated our development efforts on the VCS cloud implementation. But our goal is to make VCS fully cross-platform, so that UI components can be shared between web, iOS, Android, and cloud recording codebases.

Infrastructure

(or, video engineers love pipelines)

In our description of the Video Component System, above, we talked about VCS code running “in the cloud,” and running “inside the media processing pipelines that create recordings.” What does that mean?

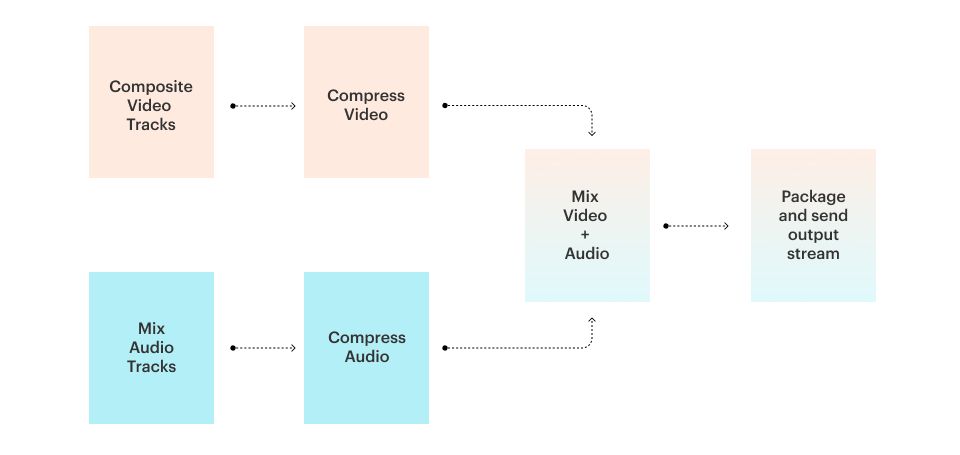

A pipeline is just the series of operations necessary to combine visual and audio elements into a video output track and an audio output track, compress those two tracks, “mux” them together into an output, and send the output to a destination.
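As a rough mental model only, those operations can be pictured as a chain of functions where each stage's output feeds the next. The sketch below is a toy: the stage names, the string placeholders standing in for media data, and the bucket path are all invented for illustration and say nothing about how the real pipeline is implemented.

```javascript
// Toy model of a recording pipeline. Every name and data shape here is illustrative.
const pipelineStages = [
  function composite(inputs) {
    // Combine visual and audio elements into one video track and one audio track.
    return {
      video: `composited(${inputs.videoTracks.join('+')})`,
      audio: `mixed(${inputs.audioTracks.join('+')})`,
    };
  },
  function compress(tracks) {
    return { video: `compressed(${tracks.video})`, audio: `compressed(${tracks.audio})` };
  },
  function mux(encoded) {
    // Interleave the two compressed tracks into a single container.
    return { container: 'mp4', payload: [encoded.video, encoded.audio] };
  },
  function deliver(output) {
    // Hypothetical destination, not a real bucket.
    return { destination: 's3://example-bucket/recording.mp4', ...output };
  },
];

function runPipeline(inputs) {
  return pipelineStages.reduce((data, stage) => stage(data), inputs);
}

const pipelineResult = runPipeline({
  videoTracks: ['alice', 'bob'],
  audioTracks: ['alice', 'bob'],
});
console.log(pipelineResult.payload);
```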

Let's flesh out some more details about the two “ends” of the pipeline.

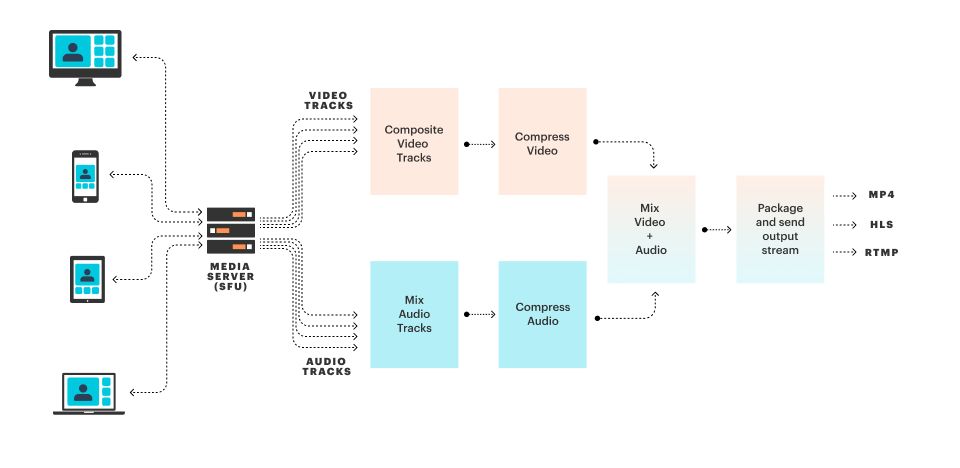

To record a live video session, the first thing we need is access to the video and audio tracks generated by the participants in the call. Recall that a real-time video session is usually managed by a media server. We wrote about media servers in the context of network architecture and video bandwidth management in previous Infrastructure Week posts. For our purposes here, we can just think of media servers as transit points for all of a session's video and audio streams. Our recording pipeline receives audio and video tracks from Daily's media servers.

At the other end, the pipeline delivers the finished recording to a storage bucket or network endpoint. Today, Daily supports three outputs: MP4 to an AWS S3 bucket, HLS to an AWS S3 bucket, and RTMP to any server that accepts an RTMP stream.

Together, these three output options cover the most common recording and live broadcast use cases. MP4 is the most widely supported video file format today. HLS is a newer (and more complex) format that better supports playback over the Internet. RTMP is the network transport used by most “live streaming” services. (For more about HLS and RTMP, see this post on how video formats that support live streaming have evolved over time.)

Now that we understand the ends of the pipeline, let's dig into what happens in the middle, where all the VCS code that defines custom layouts and dynamic elements actually runs.

To turn VCS components into pixels we need to do two things: evaluate the JavaScript (or, really, JSX) component code to create some form of lower-level rendering instructions, and then execute those rendering instructions. This two-step process will be familiar to anyone who has ever taken a computer graphics course or written OpenGL (or DirectX, or Metal) code.

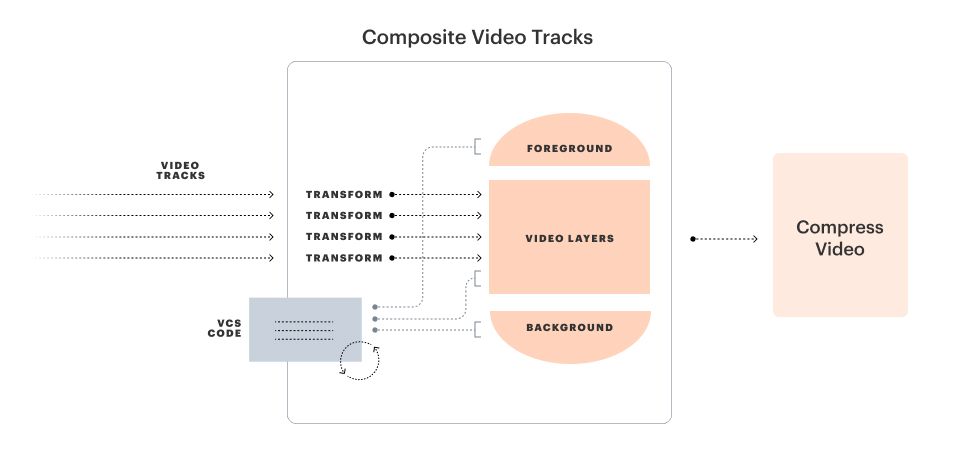

On Daily's recording servers in the cloud, the VCS components run inside a JavaScript sandbox and output a graphics display list. The display list is then rendered by a small, specialized chunk of C++ code that's designed to play nicely with the other parts of the surrounding pipeline.
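The split between "produce a display list" and "render the display list" can be sketched in a few lines of plain JavaScript. Everything in this example is an assumption made for illustration: the node shape, the single fillRect command, and the tiny grayscale framebuffer are invented, and the real display list format and C++ renderer are not shown here.

```javascript
// Step 1: "evaluate" a component tree into a flat display list.
// The node and command shapes are invented for this sketch.
function buildDisplayList(node, list = []) {
  if (node.type === 'box') {
    list.push({ op: 'fillRect', frame: node.frame, value: node.value });
  }
  for (const child of node.children || []) {
    buildDisplayList(child, list); // children draw after (on top of) parents
  }
  return list;
}

// Step 2: execute the display list against a tiny grayscale framebuffer.
function renderDisplayList(displayList, width, height) {
  const pixels = new Uint8Array(width * height);
  for (const cmd of displayList) {
    if (cmd.op !== 'fillRect') continue;
    const { x, y, w, h } = cmd.frame;
    for (let row = y; row < y + h; row++) {
      for (let col = x; col < x + w; col++) {
        pixels[row * width + col] = cmd.value;
      }
    }
  }
  return pixels;
}

const sceneTree = {
  type: 'box',
  frame: { x: 0, y: 0, w: 4, h: 4 },
  value: 10,
  children: [{ type: 'box', frame: { x: 1, y: 1, w: 2, h: 2 }, value: 200 }],
};
const sceneList = buildDisplayList(sceneTree);
const scenePixels = renderDisplayList(sceneList, 4, 4);
```

Because the display list is plain data, the code that produces it and the code that consumes it only need to agree on the command format, not on a runtime.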

The nice thing about this architecture is that we can swap out both pieces and run the same VCS components on a different platform – for example, in a local web browser. A web browser already has a JavaScript sandbox available, and our display lists can be rendered to <canvas> and <video> elements in the browser. This is how the VCS simulator is implemented.

If you've read the VCS docs closely, you might have noticed a simplification, above. There are actually three display lists and three rendered outputs. This is the hamburger model of video rendering: background graphics, a video layer, and foreground graphics. Splitting rendering into three layers allows for some helpful optimizations that are relevant to video workflows: keeping RGBA and YUV color space operations separate, and making sure that video frame rates remain consistent. If you have questions (or thoughts, or suggestions) down at this level of detail, please come join us in our Discord community. We love talking about color spaces and rendering optimizations!
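For a single pixel, the stacking order of the three layers can be sketched with the standard "over" compositing operator. This is a deliberately simplified illustration: it works entirely in non-premultiplied RGBA and ignores the RGBA/YUV separation described above, and the function names are hypothetical.

```javascript
// Standard "over" operator on non-premultiplied { r, g, b, a } pixels, a in 0..1.
function over(top, bottom) {
  const a = top.a + bottom.a * (1 - top.a);
  if (a === 0) return { r: 0, g: 0, b: 0, a: 0 };
  const mix = (t, b) => (t * top.a + b * bottom.a * (1 - top.a)) / a;
  return {
    r: mix(top.r, bottom.r),
    g: mix(top.g, bottom.g),
    b: mix(top.b, bottom.b),
    a,
  };
}

// Hamburger order: foreground graphics over video over background graphics.
function compositeLayers(background, video, foreground) {
  return over(foreground, over(video, background));
}

const bgPixel = { r: 0, g: 0, b: 255, a: 1 };
const videoPixel = { r: 100, g: 100, b: 100, a: 1 }; // video treated as opaque
const fgPixel = { r: 255, g: 0, b: 0, a: 0.5 }; // half-transparent overlay
const outPixel = compositeLayers(bgPixel, videoPixel, fgPixel);
console.log(outPixel);
```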

There's one other important detail about the pipeline architecture. All of the pieces described so far are implemented in or glued together by the GStreamer framework. GStreamer is a toolkit for building multimedia (you guessed it) pipelines and is widely used in commercial, academic, and open source video and audio software.

GStreamer is a powerful set of tools. To give you a sense of the complexity of a production GStreamer pipeline, here's an automatically produced graph of the elements of (part of) Daily's software infrastructure for recording.

Finally, all of this software has to run on servers somewhere in the cloud.

We currently run clusters of media servers in 11 geographic regions, and recording servers in three regions. Unlike media servers, Daily's recording servers are not particularly sensitive to “first hop” transit latency. So we don't need recording clusters in every region where we host media servers. But we do see slightly better performance, lower error rates, and lower costs if recording servers are near the media servers that are hosting most of the streams being recorded.

These clusters of recording servers autoscale based on usage volume and have a large margin of spare capacity built into the autoscaling algorithm. Our goal is for any client device, at any time, to be able to call our startRecording() API and have one of our servers spin up to handle their job within, at most, a few seconds.
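The idea of autoscaling with built-in headroom can be illustrated with a small calculation. This is a hypothetical sketch, not our autoscaling algorithm: the function name, the jobs-per-server figure, and the 50% spare fraction are all made-up values chosen to show the shape of the computation.

```javascript
// Hypothetical sizing rule: enough servers for current jobs, plus spare headroom.
function desiredServerCount(activeJobs, jobsPerServer, spareFraction, minServers = 1) {
  const needed = Math.ceil(activeJobs / jobsPerServer);
  const withSpare = Math.ceil(needed * (1 + spareFraction));
  // Never scale below a floor, so a new job can start without waiting for a boot.
  return Math.max(minServers, withSpare);
}

// 95 active recordings, 10 per server, 50% spare capacity (all invented numbers).
console.log(desiredServerCount(95, 10, 0.5));
```

The spare margin trades some idle cost for start-up latency: a job that arrives during a usage spike lands on a server that is already running.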

Conclusion

The API surface area of Daily's Video Component System is quite large. As with all of our APIs, our aim with VCS is to make (lots of) easy things easy to do, and (at least some) hard things possible. We've published a number of tutorials, a guide, and reference docs. If you’re interested in more background on VCS, you might also enjoy reading our post that detailed the original VCS beta release and this dive into some technical aspects of GStreamer and VCS.

We're busy adding capabilities to VCS. If you have a particularly challenging recording use case, we'd love to hear from you. VCS 1.0 is now feature complete and we have a long list of things we're excited to work on for version 2.0. But the most exciting thing of all, for us, is when people build things with our APIs that we never would have thought of ourselves. Let us know what you want to use VCS for.