We’re doing a lot of AI-related work lately at Daily. We’re helping our customers use Daily’s video, audio, and messaging features in combination with generative AI tools. We’re working with partners who are leveraging AI to bring new capabilities to verticals like healthcare, live commerce, and gaming. And we’re adding core features to our SDKs and infrastructure.

In a series of blog posts — Fun with AI — we’re sharing some of the more light-hearted experiments we’re doing with AI tools, and offering quick examples and ideas for using AI with live and recorded video and audio.

Introduction

Meeting assistants powered by large language models are an interesting new development. They’re both useful and fun to play with. So they’re a natural thing to incorporate into a real-time video app.

We are front-end engineers at Daily with a lot of experience building live video applications, libraries, and features. We wanted to see how quickly we could add basic meeting assistant functionality to video calls built on Daily; for this project, we used Daily Prebuilt, our hosted call component. This functionality would include the following:

- Client-side transcription

- A new prompt inside Daily Prebuilt's text chat

- Code to send the prompt and meeting context to OpenAI's GPT-4 service

- Logic and formatting to display the GPT-4-powered output in Daily Prebuilt's text chat

After a day or so, we had a pretty solid GPT-powered bot integration in our app and a general sense of how we could abstract the underlying APIs into something Daily customers could use in their own applications. Here’s our colleague Chad with an intro to what we built:

Daily Senior Engineer Chad looks at Nienke and Christian's AI-powered meeting assistant.

So, how did we get there? To follow along with this blog post you’ll need some basic knowledge of React and JavaScript.

Steps to create the chatbot

We hopped on a call and created the following to-do list:

- Transcribe every part of the conversation in Daily Prebuilt

- Add /gpt prompt functionality to the chat

- When a call participant starts a chat message with /gpt, send their prompt and the transcript of the meeting as it currently exists to OpenAI

- Display GPT’s response in the chat

- Format the response so that it’s clear it’s a bot’s output

Let’s start with transcription. We have a transcription API in daily-js, but for this project, we decided to experiment with the Web Speech API. Implementing Web Speech API transcription in Prebuilt took about half an hour.
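Here is a minimal sketch of continuous transcription with the Web Speech API. It is not the Prebuilt code: `startSpeechTranscription` and `onFinalText` are hypothetical names, and the recognizer constructor is passed in so the logic can also run outside a browser. The properties and events it uses (`continuous`, `interimResults`, `lang`, `onresult`, `onend`, `resultIndex`, `isFinal`) are part of the Web Speech API.

```javascript
// Hypothetical helper: continuous client-side transcription via the Web Speech API.
// RecognitionImpl is window.SpeechRecognition or window.webkitSpeechRecognition in a browser.
function startSpeechTranscription(RecognitionImpl, onFinalText, lang = "en-US") {
  const recognition = new RecognitionImpl();
  recognition.continuous = true; // keep listening across pauses
  recognition.interimResults = false; // only report finalized phrases
  recognition.lang = lang;

  let stopped = false;

  recognition.onresult = (event) => {
    // event.results accumulates over the session; start at resultIndex to handle only new entries
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (result.isFinal) {
        const text = result[0].transcript.trim();
        if (text) onFinalText(text);
      }
    }
  };

  // Browsers can end a session on their own (e.g. after silence); restart unless stopped explicitly
  recognition.onend = () => {
    if (!stopped) recognition.start();
  };

  recognition.start();

  return {
    recognition,
    stop() {
      stopped = true;
      recognition.stop();
    },
  };
}
```

In the browser you would pass `window.SpeechRecognition || window.webkitSpeechRecognition` and append each finalized phrase, along with the speaker's name and a timestamp, to the transcript.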

We decided to store the transcription in our app’s React state, so we could synchronize it with participants joining the call later (if you’re curious about synchronization in Prebuilt, check out this talk Christian gave at CommCon!). Keep in mind that this approach may not scale effectively for lengthy conversations, but it suited our prototype.
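The post doesn't show Prebuilt's state code, so this is only a hypothetical sketch of keeping the transcript in React state via a reducer (usable with React's `useReducer`). `transcriptReducer`, the action types, and the line shape `{ id, name, message, date }` (with `date` as a millisecond timestamp) are all assumptions. The `sync` action merges lines received from another participant and drops duplicates, which is one way a late joiner could catch up.

```javascript
// Hypothetical transcript reducer; each line is assumed to be { id, name, message, date }.
function transcriptReducer(state, action) {
  switch (action.type) {
    case "add-line":
      // Append a newly transcribed (or received) line
      return [...state, action.line];
    case "sync": {
      // Merge lines from another participant, skipping ids we already have
      const known = new Set(state.map((line) => line.id));
      const incoming = action.lines.filter((line) => !known.has(line.id));
      // Keep the merged transcript in chronological order
      return [...state, ...incoming].sort((a, b) => a.date - b.date);
    }
    default:
      return state;
  }
}
```

Delivering the lines to a newcomer is a separate concern; in a daily-js app, for example, an existing participant could send them with `sendAppMessage`, and the newcomer could dispatch `sync` from its `app-message` handler.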

Next up was extending our existing chat component with a new /gpt prompt. We already have a /giphy integration built into Daily Prebuilt, so all we needed to do was extend the existing code, as Chad talks through in the video.
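Prebuilt's actual /giphy handling isn't shown here, so the following is a hypothetical sketch of routing a chat input: a small parser (`parseChatCommand` is an illustrative name) that recognizes a known slash command at the start of a message and returns the rest of the message as the prompt.

```javascript
// Hypothetical slash-command parser for chat input; the command list is an assumption.
const COMMANDS = new Set(["giphy", "gpt"]);

function parseChatCommand(input) {
  // "/command rest of message" -> command name plus the remaining text (may span lines)
  const match = /^\/(\w+)(?:\s+([\s\S]*))?$/.exec(input.trim());
  if (!match || !COMMANDS.has(match[1].toLowerCase())) {
    // Not a known command: treat it as a normal chat message
    return { command: null, text: input };
  }
  return { command: match[1].toLowerCase(), text: (match[2] || "").trim() };
}
```

A chat input handler could then send `text` to the assistant when `command === "gpt"`, fall through to the existing /giphy path for `"giphy"`, and post everything else as a regular message.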

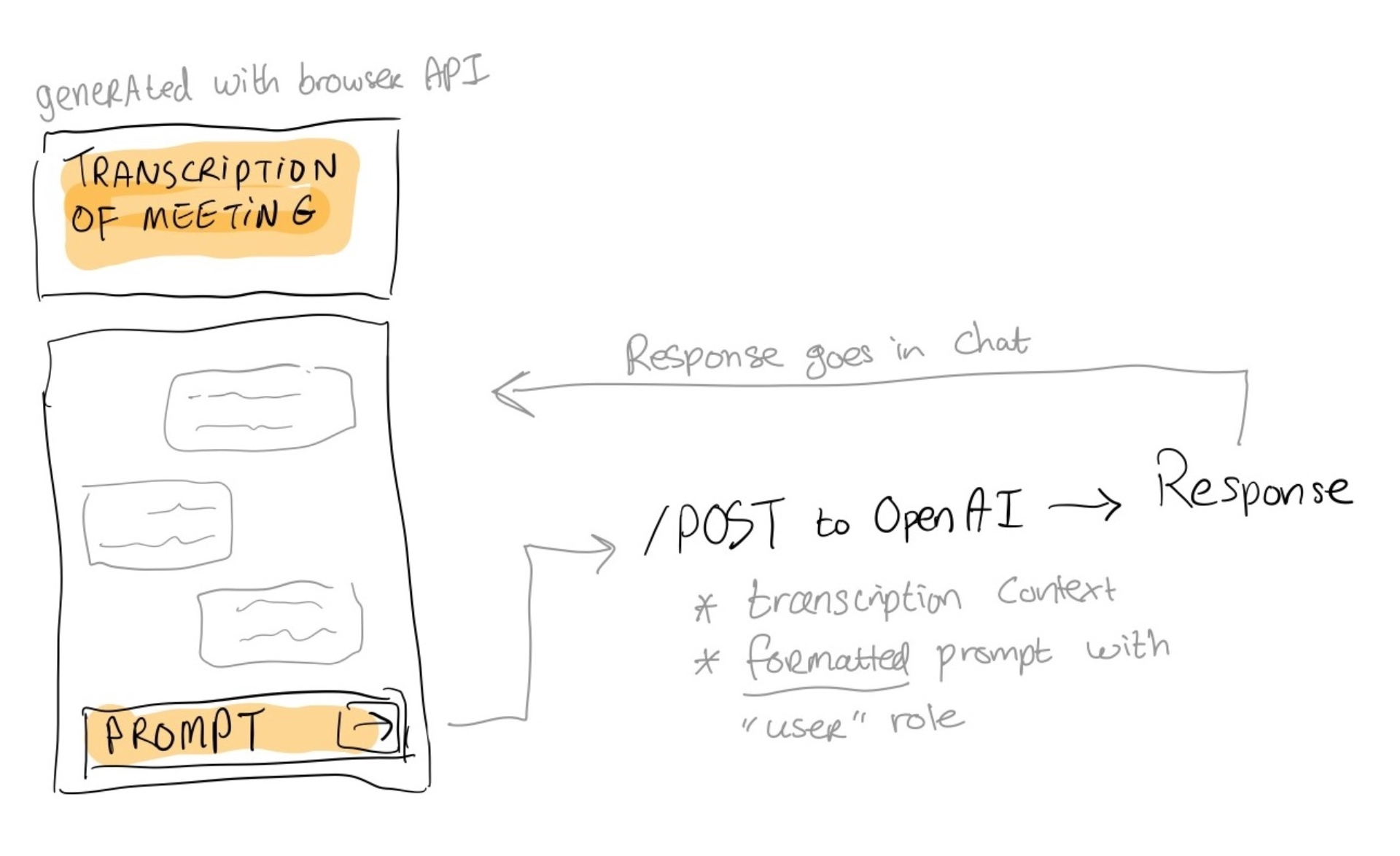

The crux of our meeting assistant logic is sending the prompt, context, and user's chat message to OpenAI and displaying GPT's response as a private message in the chat. To better visualize the process, here’s a diagram we sketched out:

We’re sending the following data to OpenAI:

1. A system prompt: every message object sent to the ChatGPT API must have a role property set to system, user, or assistant. With every request to ChatGPT, we include a message with the system role instructing ChatGPT to act as a helpful video meeting assistant.

2. The user input: the participant’s question (e.g. “What has been discussed in the meeting so far?”)

3. Context, consisting of:

- A transcription of the meeting so far

- Chat messages that have been sent by participants

- Chat messages that were ChatGPT questions or responses
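To make the shape of that payload concrete, here's a minimal sketch. The system prompt wording and the `buildMessages` helper are hypothetical; only the `{ role, content }` message format comes from the Chat Completions API.

```javascript
// Hypothetical system prompt; the exact wording used in Prebuilt isn't published.
const systemPrompt = [
  {
    role: "system",
    content:
      "You are a helpful video meeting assistant. Answer questions using the " +
      "meeting transcript and chat messages provided.",
  },
];

// Reject anything other than the three roles used in this post
const VALID_ROLES = new Set(["system", "user", "assistant"]);

// Hypothetical helper: system prompt first, then context, then the participant's question
function buildMessages(systemPrompt, contextMessages, userInput) {
  const messages = [
    ...systemPrompt,
    ...contextMessages,
    { role: "user", content: userInput },
  ];
  for (const message of messages) {
    if (!VALID_ROLES.has(message.role)) {
      throw new Error(`Invalid role: ${message.role}`);
    }
  }
  return messages;
}
```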

Since we’re using Next.js in Prebuilt, we created a new API route to “talk” to OpenAI. Here’s a simplified version:

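```javascript
// Uses the openai v3 Node SDK (createChatCompletion, response in completion.data)
import { Configuration, OpenAIApi } from 'openai';

const openai = new OpenAIApi(
  new Configuration({ apiKey: process.env.OPENAI_API_KEY })
);

const handler = async (req, res) => {
  // Next.js only parses the body for JSON requests; otherwise it arrives as a string
  const { messages } =
    typeof req.body === 'string' ? JSON.parse(req.body) : req.body;

  try {
    const completion = await openai.createChatCompletion({
      model: 'gpt-4-32k',
      messages,
    });
    res.status(200).json(completion.data);
  } catch (error) {
    console.error(error);
    // Always respond, so the client isn't left waiting on a failed request
    res.status(500).json({ error: 'Failed to get a response from OpenAI' });
  }
};

export default handler;
```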

We built a React hook called useChatBot that interacts with the new API endpoint whenever a /gpt prompt is entered in the chat. Within this hook, we construct the context based on existing transcriptions and chat messages:

```javascript
const context = [
  ...systemPrompt, // we just created this in step 1
  // Previous chat messages (sent by participants or the assistant) plus transcript lines
  ...allPreviousMessages.map((currentMessage) => ({
    role: currentMessage.fromId === 'gpt' ? 'assistant' : 'user',
    // Human messages get a [time][name][source] prefix: chat messages carry a
    // `seen` property, transcribed speech does not
    content:
      currentMessage.fromId !== 'gpt'
        ? `[${currentMessage.date()}][${currentMessage.name}][${
            'seen' in currentMessage ? 'chat' : 'speech'
          }] ${currentMessage.message}`
        : currentMessage.message,
  })),
];
```

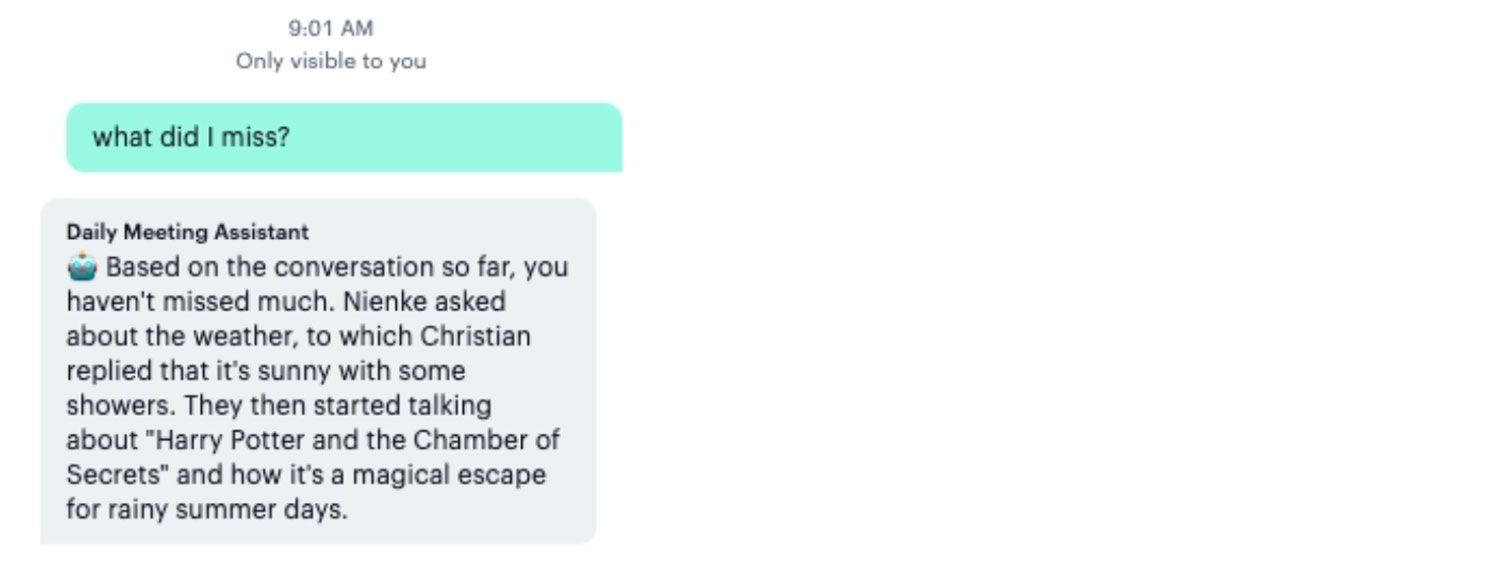

This context, the user input, and the system prompt are sent to our new API endpoint, which returns OpenAI’s response. We put this response in a private chat message:
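The post doesn't show the client side of this exchange, so here's a sketch under stated assumptions: the route path `/api/gpt`, the `askAssistant` helper, and the private-message fields `name` and `isPrivate` are hypothetical, while `choices[0].message.content` is where a Chat Completions response carries the reply. Using `fromId: 'gpt'` matches the check in the context-building code above, so the reply is sent back as an `assistant` turn on later requests.

```javascript
// Hypothetical client helper for the /gpt flow.
// Assumptions: the API route is /api/gpt and it returns OpenAI's completion JSON as-is.
async function askAssistant(messages, fetchImpl = globalThis.fetch) {
  // Sent as a plain string body, since the handler shown earlier calls JSON.parse(req.body)
  const response = await fetchImpl("/api/gpt", {
    method: "POST",
    body: JSON.stringify({ messages }),
  });
  if (!response.ok) {
    throw new Error(`Assistant request failed with status ${response.status}`);
  }
  const completion = await response.json();
  const reply = completion.choices[0].message.content;

  // Hypothetical private chat message; fromId 'gpt' marks it as the bot's output
  return {
    fromId: "gpt",
    name: "GPT",
    message: reply,
    isPrivate: true,
  };
}
```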

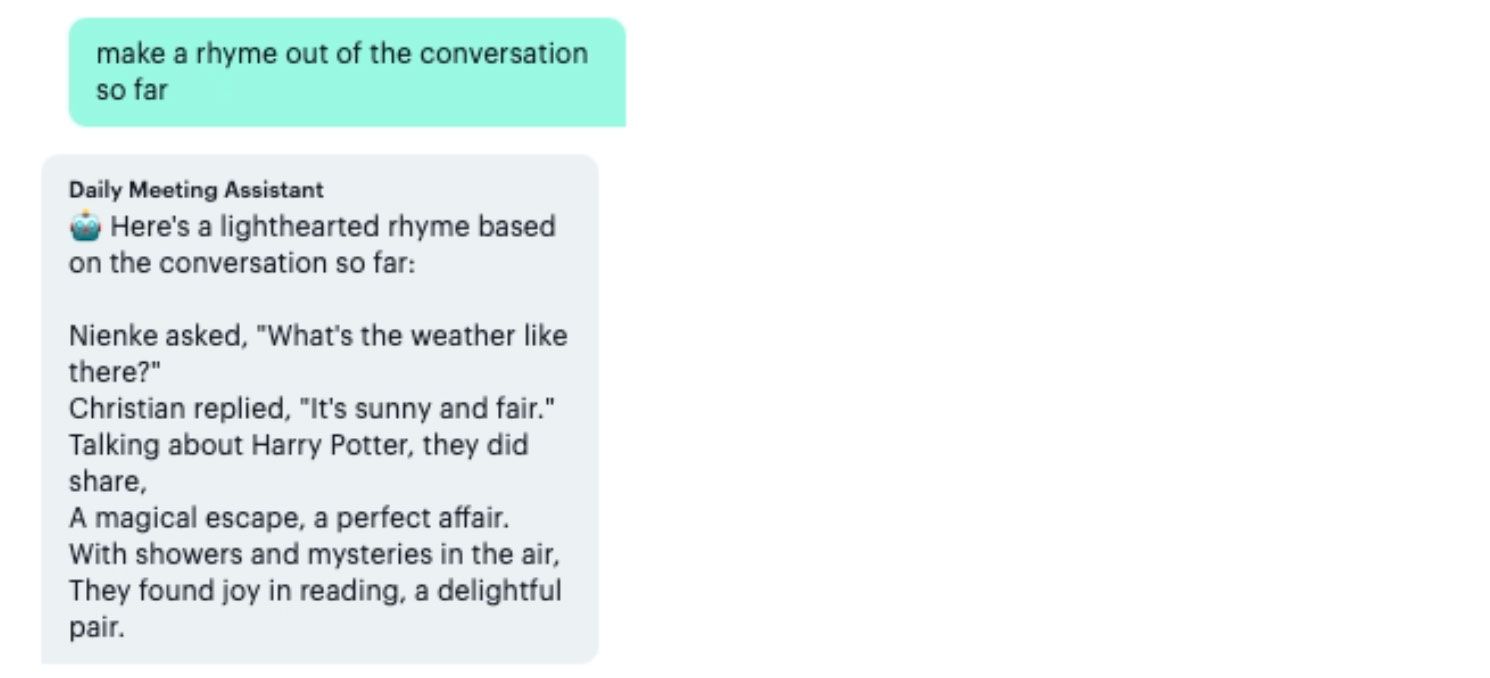

And we’ve got a working chat bot! We can now ask it questions about the meeting so far, but we can also ask it to do something a bit more creative:

How neat is that? We were pretty excited at this point! However, to turn this into a fully-fledged product, we obviously need to do a few other things.

Turning this into a real product

One limitation lies in the context window. The gpt-4-32k model we used accepts 32,768 tokens, compared with 8,192 for the standard GPT-4 model. That’s a lot, but the transcript and chat messages of a long meeting will eventually produce a context larger than that. To overcome this, we could provide summaries instead of raw transcriptions, or store transcripts as vector embeddings in a vector database and retrieve only the passages relevant to each question. Each approach has its pros and cons.
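Summaries and embeddings are beyond a quick example, but the simplest fallback, which is neither of those approaches, is to drop the oldest context until the request fits. Here's a hedged sketch: `fitToBudget` and `estimateTokens` are hypothetical helpers, and the four-characters-per-token estimate is a rough rule of thumb for English text, not a real tokenizer count.

```javascript
// Rough heuristic (assumption): about 4 characters per token for English text.
const estimateTokens = (text) => Math.ceil(text.length / 4);

// Keep the system prompt and the question, then as many of the most recent
// context messages as fit into the remaining budget.
function fitToBudget(systemMessages, contextMessages, question, maxTokens) {
  const cost = (message) => estimateTokens(message.content);
  let used = systemMessages.reduce((sum, m) => sum + cost(m), 0) + cost(question);
  const kept = [];
  // Walk backwards so newer messages win; stop at the first one that doesn't fit
  for (let i = contextMessages.length - 1; i >= 0; i--) {
    const c = cost(contextMessages[i]);
    if (used + c > maxTokens) break;
    used += c;
    kept.unshift(contextMessages[i]);
  }
  return [...systemMessages, ...kept, question];
}
```

In practice `maxTokens` should sit below the model's limit to leave room for the reply, since the prompt and the completion share the same context window.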

Another limitation is client-side speech recognition. The Web Speech API is pretty good, but Daily’s built-in real-time transcription, powered by Deepgram and run centrally instead of in each participant’s browser, is much more robust, so we’d use that instead.

Running the prompts should probably happen from a backend service, instead of the client’s browser. More on this soon!

Regardless of the limitations, we still built something pretty cool in just over a day!

We'll be back on the blog with more in this series and AI announcements. If you have any questions or comments, or if you’ve built something with AI on top of Daily, head on over to our WebRTC community to keep the conversation going.